Investing in MLOps is crucial for modern data-driven organizations

We hear it every day. An MLOps or model team leader believes they have AI governance handled because they have:

- Logging

- Alerting

- Monitoring

They should 100% celebrate their investments in these elements - they are essential for well-built modeling systems. However, logging, monitoring, and alerting are only a small subset of the many pieces of a well-governed system. Governance is not another stack component but a wide, additional layer that drives alignment, provides risk context, consumes information from existing processes, and delivers a real-time, contextually appropriate aggregate abstraction of the business decisions, systems, and models that result in a prediction. The absence of that layer is the governance gap in MLOps system design.

MLOps is not governance

MLOps is the set of practices and components required to bring an ML model from idea to production, from a technical perspective and for technical stakeholders. ML platforms are designed and built from several components working in series to deliver a model into production for inference and monitor its performance. Observability and understanding of the process and components are an afterthought and, where present, only accessible and intelligible to technical folks. From a strictly technical perspective, the platform's goal is speed, efficiency, and performance. The practice and tooling in MLOps are drawn from software engineering and DevOps practices, particularly the ‘automate everything’ mindset.

Governance is the counterweight to speed, efficiency, and automation - not because any of those qualities are inherently bad, but because well-built systems should mitigate undesirable outcomes. And sometimes going slower is the best way to get further. Good model governance embeds controls, visibility, and objectivity to achieve the most robust, performant, compliant, secure, and fair system possible. What the initial slowdown conceals is the streamlining and enablement of downstream processes. When governance is done well, our DS and ML leaders don’t need to solve it themselves. We can deliver context and tools that complement the model development lifecycle and remove the friction and painful administrative burden of future model reviews.

We all want the same thing - the best possible models in production as quickly as possible. But to date, we’ve not provided our business partners with the information they need to make decisions as quickly as we develop new models. As data scientists, we may not always be aware of broader system engineering or architectural considerations. Governance helps protect us from delivering projects with unforeseen risks, regardless of whether our business partners require this information.

Can MLOps cover the needs of AI governance?

Or vice versa? Not at all. The two play complementary roles: they speak to different audiences and meet different needs, and neither is a subset of the other. Returning to our data engineering example, this is like asking if hiring an MLE or MLInfra person obviates the need for a data engineer. While there is overlap, expanded needs require specialist tools and skills. Let's examine some examples of the relationship between MLOps and governance.

Logging

Logging is generally concerned with creating a record of what happens in a system to provide a way to check on errors and understand system performance post-hoc. There are many similarities between the items we want to log from the MLOps and AI governance perspectives. Primary differences lie in the needs of the consuming audience, and thus the access patterns and the presentation layer must be considered. A DS or MLE can easily query a database or interact with a command-line tool and visualize the results using their favorite data visualization library. However, risk, compliance, and legal stakeholders would be unlikely to have the skills (or access) to do so.

Next is the breadth of values. In an MLOps system, model feature vectors and outcomes are logged, along with many details about the individual components that come together to allow the productive use of the modeling system: build logs, systems logs, records of health checks, and various other quantities. All of these quantities are essential to capture - but they do not begin to meet the documentation requirements of a robust AI governance program, from either a presentation or a completeness perspective.

Logging, from the perspective of recording critical information about a model, begins quite a bit before the feature vector of a prediction is captured in a well-governed system. The gathering of information begins at project inception and flows through model decommission - and all elements must be legible and accessible to our non-technical stakeholders.
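As a rough illustration of what "inception through decommission" could look like in a log, here is a minimal sketch of a lifecycle event record. The stage names, field names, and the `GovernanceEvent` class are all assumptions made for this example, not a standard schema. The point is only that each record carries a plain-language summary alongside a pointer to the technical evidence.

```python
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
import json

# Hypothetical lifecycle stages; a real governance program defines its own.
STAGES = ("inception", "data_selection", "training", "review",
          "deployment", "monitoring", "decommission")


@dataclass(frozen=True)
class GovernanceEvent:
    model_id: str
    stage: str
    summary: str             # plain-language note for non-technical readers
    author: str
    evidence_uri: str = ""   # pointer to the supporting artifact, if any
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def __post_init__(self):
        # Reject stages outside the agreed vocabulary so records stay comparable.
        if self.stage not in STAGES:
            raise ValueError(f"unknown stage: {self.stage}")


def to_log_line(event):
    """Serialize one event as a single JSON line."""
    return json.dumps(asdict(event), sort_keys=True)


def timeline(events, model_id):
    """Return one model's events ordered by lifecycle stage, then by time."""
    mine = [e for e in events if e.model_id == model_id]
    return sorted(mine, key=lambda e: (STAGES.index(e.stage), e.recorded_at))
```

A record like this is cheap to write at the moment a decision is made and very expensive to reconstruct three years later.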

Let’s look at a concrete workflow as an example. A regulatory body has inquired about an underwriting decision made in July of 2023. It is December of 2026. Your company’s legal, audit, compliance, and risk teams have been working together to prepare the documents required to respond to the inquiry. They need precise information on the model.

As a leader of the data science organization, you and your people are prepared to answer questions about your model’s and system’s performance, and you are confident your data scientists made the right decisions during model build and training. You ask the line manager in underwriting data science to pull the build logs and relevant model documentation for review before the official ask comes through.

The first issue is that the former manager in Underwriting DS has taken a new role in a new division and has a major project due.

Beyond the first people and priorities challenge, some requested items are challenging to obtain when the ask comes through.

A few examples:

- Which version of the model was live across a date range, and if more than one, how were they different?

- What data source was used to build the training data set, and how was it permissioned at the time of training?

- Where is the original training data set, and how did the feature engineering process address the issue of potential proxy bias?

- How many issues were flagged with the model while this version was live? Where is the record of issues resolution, how many were high priority, and who resolved them?

- How many decisions were processed by the model across this period? What was the outcome distribution? How were outcomes distributed across feature vectors similar to the decision in question? And if the current running model is different, how are the distributions of the subsequent models the same or different?

- How often have the underlying data sources and feature transformation pipelines changed from the model in question to the currently running model?

- What is the version history of all production data pipelines that handle pre and post-processing logic across the model history?

- Please stand the model in question back up and run the historic feature vector against the model to audit the performance against our logged records. What is the output?

- Can you explain the model selection process? How was model complexity weighed, e.g., if the model is a GBM, why was that selected over a linear model?
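The first question in the list above becomes a simple lookup if deployment windows are recorded as they happen. The sketch below assumes a hypothetical `Deployment` record with an exclusive end date; the version labels and dates are made up for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Deployment:
    model_version: str
    live_from: date
    live_until: Optional[date]  # None means still live; end date is exclusive


def versions_live_between(deployments, start, end):
    """Return versions whose live window overlaps [start, end], oldest first."""
    hits = []
    for d in deployments:
        ended_before = d.live_until is not None and d.live_until <= start
        started_after = d.live_from > end
        if not (ended_before or started_after):
            hits.append(d)
    return [d.model_version for d in sorted(hits, key=lambda d: d.live_from)]


# Illustrative history only.
history = [
    Deployment("v0.3", date(2022, 11, 1), date(2023, 7, 15)),
    Deployment("v1.2", date(2023, 7, 15), date(2025, 3, 1)),
    Deployment("v2.3", date(2025, 3, 1), None),
]

print(versions_live_between(history, date(2023, 7, 1), date(2023, 7, 31)))
# prints ['v0.3', 'v1.2']
```

Here two versions were live during July 2023, which is exactly the case where the follow-up question ("how were they different?") needs a recorded answer too.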

Unfortunately, the data scientist who initially built the model has left the company. This leaves the Underwriting DS manager digging through old emails, slide decks, and system logs for clues.

The result is that many unplanned hours are spent retrieving documents and building context around the raw answers (in systems logs, monitoring systems, etc.). The VP in the manager’s new area is neither happy nor amused with the delay and deprioritization of their recent work, and the manager is burnt out and overwhelmed.

Now the fun starts. The auditor (or regulator) makes the following statement:

“We’d like to see evidence of your data selection criteria”

Can that be done, given staffing changes and the passing of time? Where was it captured, and how has the present practice prepared your organization for the inquiry?

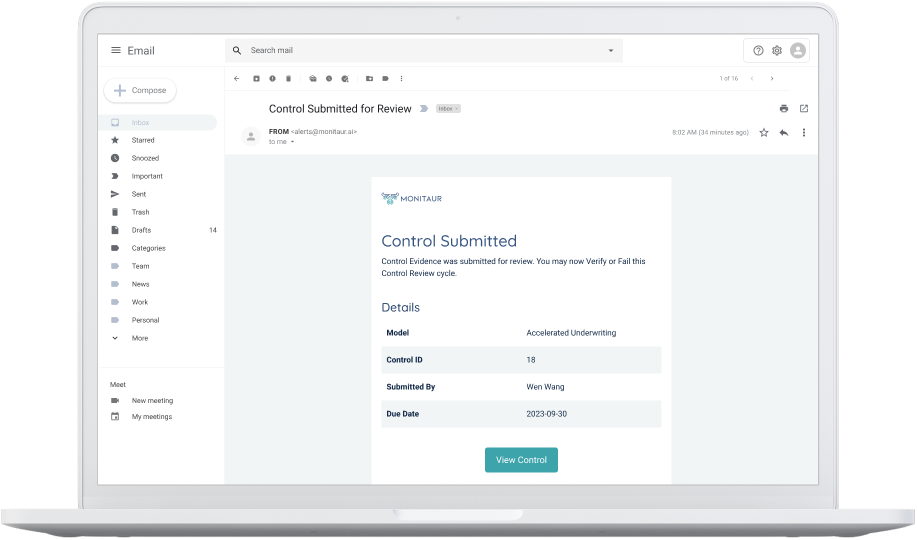

In a mature AI governance environment, the questions posed above are self-serviceable by business partners. Given an exceptionally robust system, a business user can also spin up an arbitrarily old model and re-run the transaction against it - to verify that the decision is as recorded. The business user can also spin up other models that have since been commissioned or decommissioned and run the historic feature vector against them - what prediction comes from model v0.3 vs. v1.2 vs. v2.3?

If an old model was found problematic, do our models since (and present model) have the same issues? Can we demonstrate that to a regulator?
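To make the replay idea concrete, here is a minimal sketch that scores one historic feature vector against every archived version and checks whether the logged decision is reproduced. Everything here is a stand-in: the `replay` helper, the feature names, and the toy linear scorers are assumptions for illustration, and real archived models would be loaded from a model registry rather than defined inline.

```python
from typing import Callable, Dict, Mapping

Features = Mapping[str, float]
Model = Callable[[Features], float]


def replay(models: Dict[str, Model], features: Features,
           logged_version: str, logged_score: float, tol: float = 1e-9):
    """Score one historic feature vector on every archived model version."""
    scores = {version: model(features) for version, model in models.items()}
    # The audit check: does the version that was live reproduce the logged score?
    reproduced = abs(scores[logged_version] - logged_score) <= tol
    return {"scores": scores, "logged_decision_reproduced": reproduced}


def make_linear(weights, bias):
    """Build a toy linear scorer standing in for an archived model."""
    def score(x):
        return bias + sum(w * x[name] for name, w in weights.items())
    return score


# Hypothetical archive and feature vector.
archive = {
    "v0.3": make_linear({"income": 0.5, "debt_ratio": -1.0}, 0.0),
    "v1.2": make_linear({"income": 0.25, "debt_ratio": -2.0}, 0.0),
}
historic = {"income": 2.0, "debt_ratio": 0.5}

report = replay(archive, historic, logged_version="v0.3", logged_score=0.5)
print(report["logged_decision_reproduced"])  # prints True
print(report["scores"])
```

The side-by-side scores are what let you answer whether later models treat the same applicant differently from the version in question.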

Monitoring

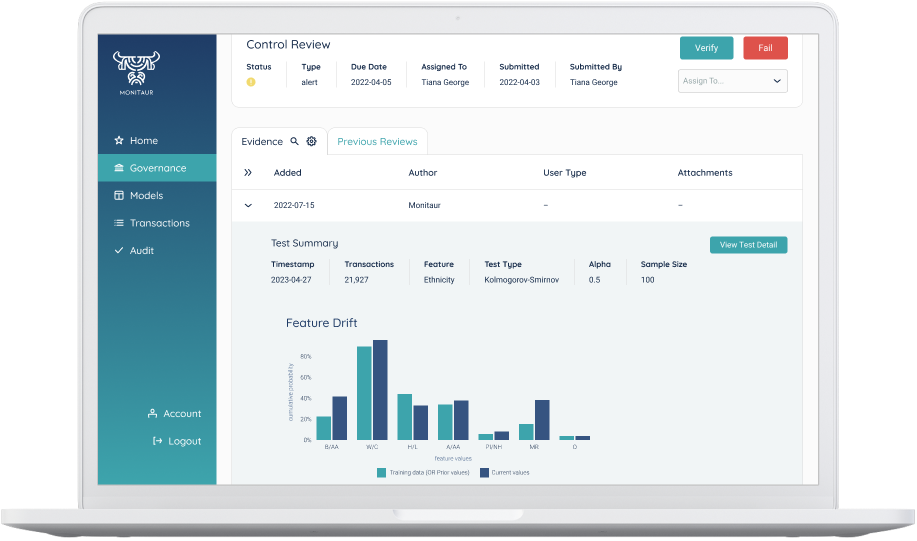

The monitoring components of MLOps and AI governance systems are the most similar elements we’ll discuss, though even here, strong MLOps practices do not obviate good governance. For each, there is concern with model performance at present and over time, training/serving skew, and catching anomalies that could flow through the system to produce undesirable outcomes. Depending on the maturity of the individual MLOps practice, monitoring may be done ad-hoc in a notebook without third-party evaluation or propagated into a dashboard with various visualizations.

Table stakes for these platforms (as there are many) are feature and outcome distributions, the ability to run statistical tests against the collected data, and the ability to view model performance against sub-segments of the feature vectors.
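As one example of the kind of distribution check these platforms run, here is a minimal sketch of the population stability index (PSI), a drift measure often used to compare a feature's training distribution with its serving distribution. It is a drift score rather than a formal hypothesis test, and the bin edges and the `eps` floor for empty bins are choices made for this example.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population stability index between two samples over fixed bin edges."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")

    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bin index = number of edges at or below the value.
            counts[sum(1 for e in edges if v >= e)] += 1
        total = len(values)
        # Floor empty bins at eps so the log ratio stays finite.
        return [max(c / total, eps) for c in counts]

    p, q = shares(expected), shares(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Illustrative samples only.
training = [1, 2, 3, 4]
serving_same = [1, 2, 3, 4]
serving_shifted = [3, 4, 5, 6]

print(psi(training, serving_same, edges=[2.5]))      # prints 0.0
print(psi(training, serving_shifted, edges=[2.5]) > 0)  # prints True
```

Each term of the sum is non-negative, so the score is zero only when the binned distributions match and grows as they diverge.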

The consumer of these dashboards is typically a data scientist tasked with monitoring the performance of the models they built, or a team member in an allied role - perhaps a data engineer or analyst. The notion of a non-technical user needing (or wanting) to use these tools is rarely contemplated. Model outcome and performance questions asked by business leaders are routed, usually via IT tickets, to the technical folks, who must synthesize an answer from the data and create a story legible to a business user.